Harnessing Machine Learning to Detect To...

By Evan Dangol

07 Feb 2024

This is a simple console application in C#.

In today's digital age, online platforms are bustling hubs of interaction. While these platforms foster communication and connection, they also unfortunately attract toxic behavior, such as hate speech and harassment. However, thanks to advancements in technology, particularly in the realm of machine learning, we now have powerful tools at our disposal to combat toxicity effectively.

In this blog post, I'll demonstrate how to use machine learning within a C# console application to detect toxic comments. Specifically, we'll use ML.NET to train a binary classifier (logistic regression trained with the SDCA algorithm) and check its quality with the `CalibratedBinaryClassificationMetrics` that ML.NET's binary-classification evaluator returns.

Machine learning provides a promising solution to the challenge of identifying toxic comments. By training models on labeled datasets containing examples of toxic and non-toxic comments, we can teach our system to recognize patterns indicative of toxicity.

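`CalibratedBinaryClassificationMetrics` is the class ML.NET returns when you evaluate a binary classifier that outputs calibrated probabilities. Besides the usual metrics (accuracy, AUC, F1 score, precision and recall), it reports probability-based metrics such as log-loss. These metrics tell us how well the model performs; they measure the predictions rather than improve them.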
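A calibrated classifier's probabilities can be read as frequencies: of all comments given a probability near 0.8, roughly 80% should really carry label 1. The plain C# sketch below illustrates that idea with a simple reliability table: it groups predictions into probability bins and compares each bin's average predicted probability with the observed share of label-1 examples. The helper `ReliabilityTable` and the sample scores are hypothetical; this is not an ML.NET API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: groups (predicted probability, true label) pairs into
// equal-width bins and returns, for each non-empty bin, the mean predicted
// probability and the fraction of examples whose true label is true (1).
List<(int Bin, double MeanPredicted, double ObservedRate, int Count)> ReliabilityTable(
    IReadOnlyList<(double Probability, bool Label)> predictions, int bins)
{
    return predictions
        // Map a probability in [0, 1] to a bin index; 1.0 goes into the last bin.
        .GroupBy(p => Math.Min((int)(p.Probability * bins), bins - 1))
        .OrderBy(g => g.Key)
        .Select(g => (
            Bin: g.Key,
            MeanPredicted: g.Average(p => p.Probability),
            ObservedRate: g.Average(p => p.Label ? 1.0 : 0.0),
            Count: g.Count()))
        .ToList();
}

// Made-up example scores, not output from the model in this post.
var sample = new List<(double Probability, bool Label)>
{
    (0.10, false), (0.20, false), (0.15, true), (0.05, false),
    (0.80, true), (0.90, true), (0.85, false), (0.95, true),
};

foreach (var row in ReliabilityTable(sample, bins: 2))
{
    Console.WriteLine($"bin {row.Bin}: mean predicted {row.MeanPredicted:F3}, observed {row.ObservedRate:F3} (n={row.Count})");
}
```

For well-calibrated probabilities the two columns stay close in every bin; here the low bin predicts 0.125 on average but observes 0.25, a gap that would suggest the probabilities shouldn't be taken literally.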

1. Data Collection: Obtain a dataset of labeled comments, where each comment is annotated as toxic or non-toxic.
2. Data Preprocessing: Clean and normalize the text (for example, lowercasing and handling punctuation) so that irrelevant variation doesn't affect the model.
3. Feature Extraction: Convert the text into numeric features, such as word frequencies or embeddings. In this app, ML.NET's `FeaturizeText` transform handles both preprocessing and feature extraction.
4. Model Training: Train a binary classifier on the labeled dataset. This post uses ML.NET's `SdcaLogisticRegression` trainer.
5. Evaluation: Measure the trained model on held-out data using the `CalibratedBinaryClassificationMetrics` returned by ML.NET's evaluator.
6. Deployment: Use the trained model inside the C# console application, where users type comments and immediately receive a toxicity prediction.
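The preprocessing and feature-extraction steps can be made concrete with a hand-rolled bag-of-words featurizer: lowercase the text, replace everything except letters, digits and apostrophes with spaces, split into words, and count them. This is a simplified stand-in for illustration only; `FeaturizeText` does considerably more (for example, it can also use character n-grams and normalize the resulting vector), and the helper names `Normalize` and `WordCounts` are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Preprocessing: lowercase and replace anything that is not a letter, digit,
// or apostrophe with a space, so punctuation doesn't stick to words.
string Normalize(string text)
{
    var sb = new StringBuilder(text.Length);
    foreach (char c in text.ToLowerInvariant())
    {
        sb.Append(char.IsLetterOrDigit(c) || c == '\'' ? c : ' ');
    }
    return sb.ToString();
}

// Feature extraction: a bag of words, i.e. how often each word occurs.
Dictionary<string, int> WordCounts(string text)
{
    return Normalize(text)
        .Split(' ', StringSplitOptions.RemoveEmptyEntries)
        .GroupBy(word => word)
        .ToDictionary(g => g.Key, g => g.Count());
}

var features = WordCounts("The plastic breaks really easy. Really!");
foreach (var (word, count) in features.OrderBy(kv => kv.Key))
{
    Console.WriteLine($"{word}: {count}");
}
```

After normalization, "Really!" and "really" become the same feature, so `really` is counted twice.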

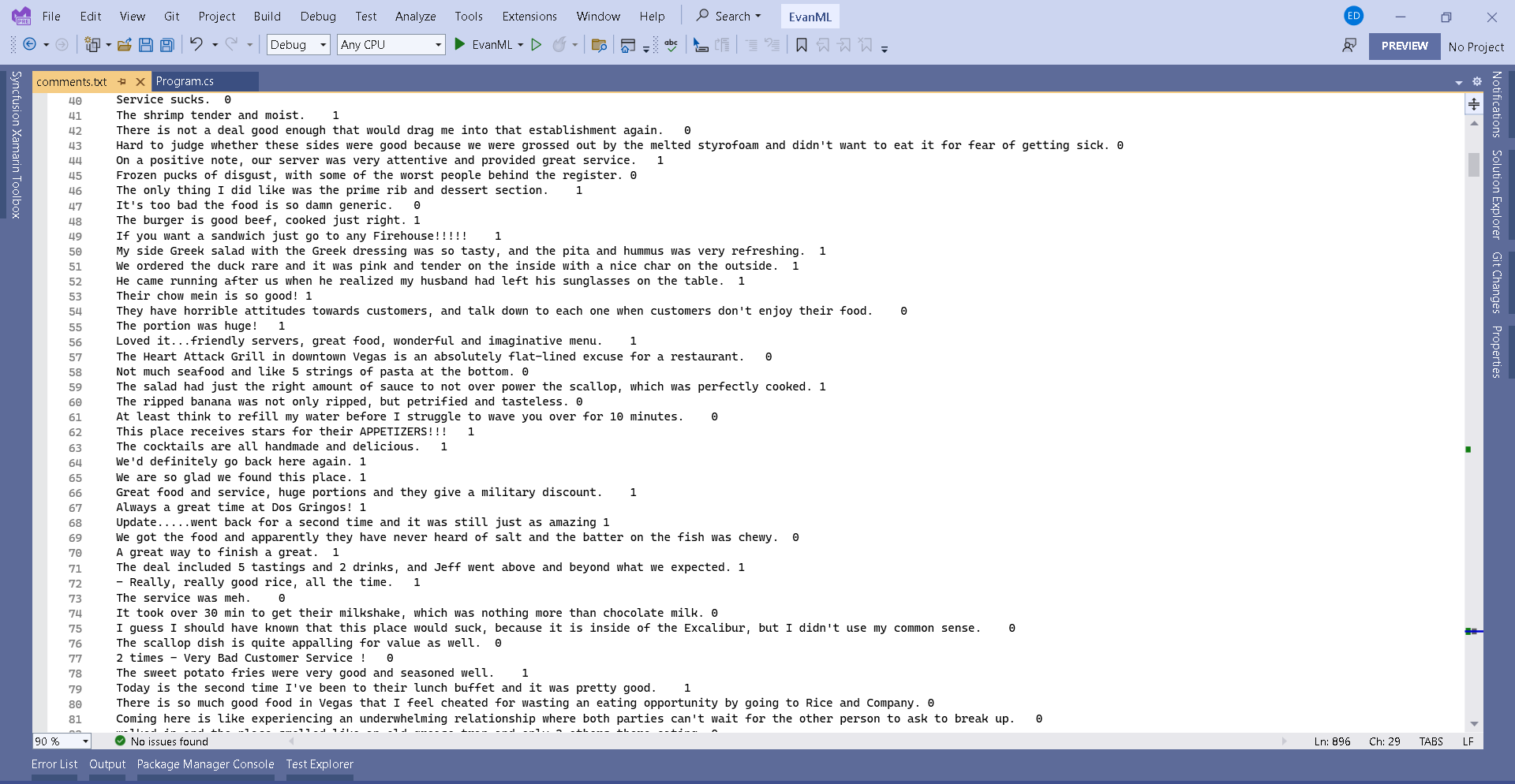

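For data collection, I used the "Data Download" link to download a labeled dataset and saved it as comments.txt. Each line holds a comment, a tab, and a label: 1 for a positive (non-toxic) comment and 0 for a negative (toxic) one. As the excerpt shows, these are product reviews labeled by sentiment rather than by toxicity, so the model effectively learns positive versus negative tone; for genuine toxicity detection, substitute a dataset labeled for toxicity.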

Excerpt from comments.txt:

```text
Plantronics Bluetooth Excelent Buy.	1
I came over from Verizon because cingulair has nicer cell phones.... the first thing I noticed was the really bad service.	0
I'll be looking for a new earpiece.	0
I highly recommend this device to everyone!	1
Jawbone Era is awesome too!	1
We received a WHITE colored battery that goes DEAD after a couple hoursTHe original used to last a week - but still lasts longer than thereplacement	0
After a year the battery went completely dead on my headset.	0
I have used several phone in two years, but this one is the best.	1
An Awesome New Look For Fall 2000!.	1
This is the first phone I've had that has been so cheaply made.	0
Att is not clear, sound is very distorted and you have to yell when you talk.	0
The plastic breaks really easy on this clip.	0
Price is good too.	1
Don't make the same mistake I did.	0
Oh and I forgot to also mention the weird color effect it has on your phone.	0
I'm using it with an iriver SPINN (with case) and it fits fine.	1
Also the area where my unit broke).- I'm not too fond of the magnetic strap.	0
Overall, I am psyched to have a phone which has all my appointments and contacts in and gets great reception.	1
every thing on phone work perfectly, she like it.	1
Another note about this phone's appearance is that it really looks rather bland, especially in the all black model.	0
```
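Because `LoadFromTextFile` splits columns on tabs unless you pass a different `separatorChar`, each line of comments.txt needs the comment text, a tab, and the label. The sketch below parses lines in that shape by hand and counts the labels, a quick way to check the file's format and class balance before training. `ParseLine` is a hypothetical helper that treats the last tab-separated field as the label; it does not replace the ML.NET loader.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: parses one "comment<TAB>label" line.
// Label "1" = positive/non-toxic, "0" = negative/toxic in this dataset.
(string Comment, bool Label)? ParseLine(string line)
{
    int tab = line.LastIndexOf('\t');
    if (tab < 0) return null; // no tab: not in the expected format

    string labelText = line[(tab + 1)..].Trim();
    if (labelText != "0" && labelText != "1") return null;

    return (line[..tab], labelText == "1");
}

// A few lines in the same shape as the comments.txt excerpt.
string[] lines =
{
    "Price is good too.\t1",
    "The plastic breaks really easy on this clip.\t0",
    "Don't make the same mistake I did.\t0",
    "no label on this line",
};

var parsed = new List<(string Comment, bool Label)>();
foreach (string rawLine in lines)
{
    if (ParseLine(rawLine) is { } entry) parsed.Add(entry);
}

int positives = parsed.Count(p => p.Label);
Console.WriteLine($"{parsed.Count} valid lines: {positives} labeled 1, {parsed.Count - positives} labeled 0");
```

A line whose label is separated by a space instead of a tab is rejected here, which mirrors the kind of formatting problem that would also trip up the real loader.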

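Next, create the model classes. `CommentData` describes one row of the input file, and `ToxicityPrediction` adds the columns the trained model produces: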

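```csharp
using Microsoft.ML.Data;

// Matches the `using SentimentAnalysis;` directive in Program.cs.
namespace SentimentAnalysis;

public class CommentData
{
    // Column 0: the comment text.
    [LoadColumn(0)]
    public string? Comment;

    // Column 1: the label. In this dataset 1 (true) means positive/non-toxic
    // and 0 (false) means negative/toxic.
    [LoadColumn(1), ColumnName("Label")]
    public bool IsNonToxic;
}

public class ToxicityPrediction : CommentData
{
    // True when the model predicts label 1, i.e. a non-toxic comment.
    [ColumnName("PredictedLabel")]
    public bool Prediction { get; set; }

    // Calibrated probability that the label is true (non-toxic).
    public float Probability { get; set; }

    // Raw, uncalibrated model score.
    public float Score { get; set; }
}
```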
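`ToxicityPrediction` exposes both a raw `Score` and a `Probability`. One standard way to turn a raw classifier score s into a calibrated probability is Platt scaling, which passes a linear function of the score through a sigmoid, p = 1 / (1 + exp(A·s + B)), with A and B fitted on data. The sketch below shows only that mapping, with hypothetical parameter values; it illustrates the idea and does not reproduce how ML.NET computes `Probability` for this model.

```csharp
using System;

// Platt scaling: maps a raw classifier score to a probability
// p = 1 / (1 + exp(a * score + b)). With a = -1 and b = 0 this is the
// ordinary logistic sigmoid 1 / (1 + exp(-score)).
double PlattProbability(double score, double a, double b) =>
    1.0 / (1.0 + Math.Exp(a * score + b));

// Hypothetical calibration parameters, chosen only for illustration.
const double A = -1.0;
const double B = 0.0;

foreach (double score in new[] { -2.0, 0.0, 2.0 })
{
    Console.WriteLine($"score {score,5:F1} -> probability {PlattProbability(score, A, B):F3}");
}
```

Higher scores map to higher probabilities, and a score of 0 maps to exactly 0.5 with these parameters.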

Add the Microsoft.ML NuGet package to the project, then add the following `using` directives at the top of Program.cs:

```csharp
using Microsoft.ML;
using Microsoft.ML.Data;
using SentimentAnalysis;
using static Microsoft.ML.DataOperationsCatalog;
```

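Load and Split the Data

Load comments.txt into an `IDataView` and hold out 20% of the rows (`testFraction: 0.2`) as a test set for evaluation: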

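```csharp
// comments.txt must be in the current working directory; when running from
// Visual Studio, set the file's "Copy to Output Directory" property.
string _dataPath = Path.Combine(Environment.CurrentDirectory, "comments.txt");

MLContext mlContext = new MLContext();

TrainTestData splitDataView = LoadData(mlContext);

Console.ForegroundColor = ConsoleColor.White;

TrainTestData LoadData(MLContext mlContext)
{
    // Reads tab-separated "comment<TAB>label" lines into an IDataView.
    IDataView dataView = mlContext.Data.LoadFromTextFile<CommentData>(_dataPath, hasHeader: false);

    // Holds out 20% of the rows for testing; the rest are used for training.
    TrainTestData splitDataView = mlContext.Data.TrainTestSplit(dataView, testFraction: 0.2);

    return splitDataView;
}
```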
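`TrainTestSplit` randomly divides the rows so that about 20% end up in the test set. As an illustration of the idea only (ML.NET's own implementation differs in its details), here is a plain C# sketch that shuffles a copy of the rows with a seeded Fisher-Yates shuffle and then cuts off the test portion. `SplitRows` is a hypothetical helper; the fixed seed makes the split reproducible from run to run.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Shuffles a copy of the rows with a seeded Fisher-Yates shuffle, then puts the
// first testFraction of them into the test set and the rest into the training set.
(List<T> Train, List<T> Test) SplitRows<T>(IEnumerable<T> rows, double testFraction, int seed)
{
    var shuffled = rows.ToList();
    var rng = new Random(seed);
    for (int i = shuffled.Count - 1; i > 0; i--)
    {
        int j = rng.Next(i + 1); // 0 <= j <= i
        (shuffled[i], shuffled[j]) = (shuffled[j], shuffled[i]);
    }

    int testCount = (int)Math.Round(shuffled.Count * testFraction);
    return (shuffled.Skip(testCount).ToList(), shuffled.Take(testCount).ToList());
}

var (train, test) = SplitRows(Enumerable.Range(1, 10), testFraction: 0.2, seed: 42);
Console.WriteLine($"train: {train.Count} rows, test: {test.Count} rows");
```

Every row lands in exactly one of the two sets, which is what keeps the evaluation honest: the model is never scored on rows it was trained on.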

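Build and train the model. `FeaturizeText` converts each comment into a numeric feature vector, and `SdcaLogisticRegression` trains a binary logistic-regression classifier on those features: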

```csharp
ITransformer model = BuildAndTrainModel(mlContext, splitDataView.TrainSet);

ITransformer BuildAndTrainModel(MLContext mlContext, IDataView splitTrainSet)
{
    // Text -> numeric "Features" vector, then a logistic-regression classifier
    // trained with SDCA on the "Label" column.
    var estimator = mlContext.Transforms.Text.FeaturizeText(outputColumnName: "Features", inputColumnName: nameof(CommentData.Comment))
        .Append(mlContext.BinaryClassification.Trainers.SdcaLogisticRegression(labelColumnName: "Label", featureColumnName: "Features"));

    Console.WriteLine("------------------------------Toxic Comment Analyzer-----------------------------------");
    Console.WriteLine();
    Console.WriteLine("Building and Training the model...");

    // Fit runs the featurizer and the trainer over the training set.
    var model = estimator.Fit(splitTrainSet);

    Console.WriteLine("End of training");
    Console.WriteLine();

    return model;
}
```
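`SdcaLogisticRegression` trains a logistic-regression model: one weight per feature plus a bias, with the probability of label 1 given by the sigmoid of the weighted sum. ML.NET optimizes it with SDCA (stochastic dual coordinate ascent). To keep the example short, the sketch below swaps in plain batch gradient descent on the log-loss, a different optimizer for the same kind of model. `TrainLogistic` and the two-feature toy data are hypothetical.

```csharp
using System;
using System.Linq;

double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

double Dot(double[] a, double[] c) => a.Zip(c, (p, q) => p * q).Sum();

// Fits logistic-regression weights and bias with batch gradient descent on
// the average log-loss (not SDCA, which is what ML.NET's trainer uses).
(double[] Weights, double Bias) TrainLogistic(double[][] x, bool[] y, double learningRate, int epochs)
{
    int n = x.Length, d = x[0].Length;
    var w = new double[d];
    double b = 0;
    for (int epoch = 0; epoch < epochs; epoch++)
    {
        var gradW = new double[d];
        double gradB = 0;
        for (int i = 0; i < n; i++)
        {
            // Gradient of the log-loss w.r.t. the linear score is (p - y).
            double error = Sigmoid(Dot(w, x[i]) + b) - (y[i] ? 1.0 : 0.0);
            for (int k = 0; k < d; k++) gradW[k] += error * x[i][k];
            gradB += error;
        }
        for (int k = 0; k < d; k++) w[k] -= learningRate * gradW[k] / n;
        b -= learningRate * gradB / n;
    }
    return (w, b);
}

// Made-up features: [count of "great", count of "broke"]; label true = non-toxic.
double[][] features = { new[] { 2.0, 0.0 }, new[] { 1.0, 0.0 }, new[] { 0.0, 1.0 }, new[] { 0.0, 2.0 } };
bool[] labels = { true, true, false, false };

var (weights, bias) = TrainLogistic(features, labels, learningRate: 0.5, epochs: 500);
double pGood = Sigmoid(Dot(weights, new[] { 1.0, 0.0 }) + bias);
Console.WriteLine($"P(non-toxic | one 'great') = {pGood:F3}");
```

The learned weight for "great" ends up positive and the one for "broke" negative, which is the same kind of pattern the real model learns from word features.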

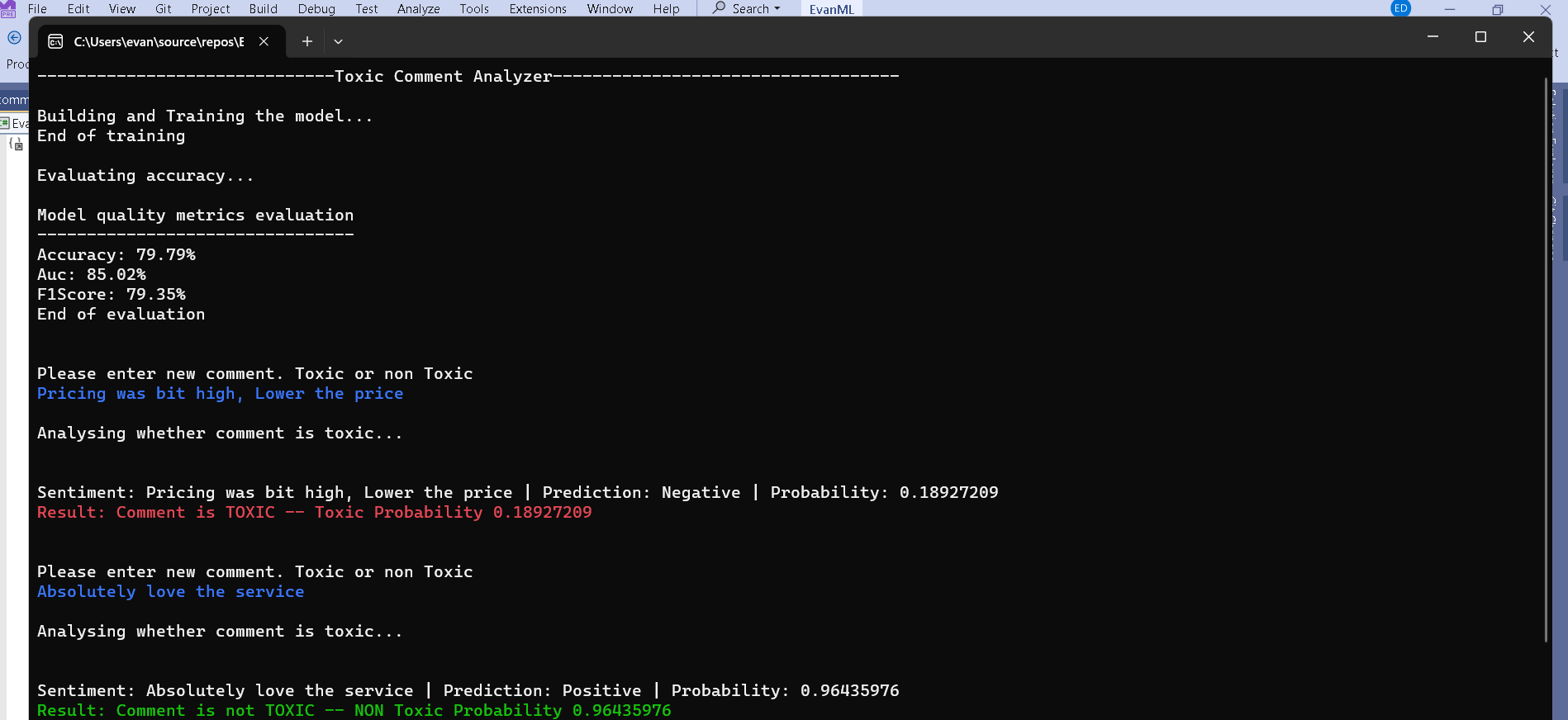

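Evaluate the Model

Evaluate the trained model on the held-out test set. `mlContext.BinaryClassification.Evaluate` returns a `CalibratedBinaryClassificationMetrics` object: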

```csharp
Evaluate(mlContext, model, splitDataView.TestSet);

void Evaluate(MLContext mlContext, ITransformer model, IDataView splitTestSet)
{
    Console.WriteLine("Evaluating accuracy...");

    // Score the test set, then compare the predictions with the true labels.
    IDataView predictions = model.Transform(splitTestSet);
    CalibratedBinaryClassificationMetrics metrics = mlContext.BinaryClassification.Evaluate(predictions, "Label");

    Console.WriteLine();
    Console.WriteLine($"Accuracy: {metrics.Accuracy:P2}");
    Console.WriteLine($"AUC: {metrics.AreaUnderRocCurve:P2}");
    // F1 is computed for the positive class (label 1, non-toxic).
    Console.WriteLine($"F1Score: {metrics.F1Score:P2}");
    // Log-loss is based on the predicted probabilities; lower is better.
    Console.WriteLine($"LogLoss: {metrics.LogLoss:F4}");
    Console.WriteLine("End of evaluation");
}
```
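The evaluator computes these metrics for you; to show what they mean, the sketch below recomputes accuracy, precision, recall and F1 from confusion-matrix counts, and AUC as the fraction of (positive, negative) pairs whose scores are ranked correctly, with ties counted as half. The helpers and the six test results are made up for illustration, and ML.NET may treat edge cases (such as an empty class) differently. As with ML.NET's `F1Score`, precision, recall and F1 here refer to the positive class, which in this dataset is label 1 (non-toxic).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Accuracy, precision, recall and F1 for the positive class (label == true).
(double Accuracy, double Precision, double Recall, double F1) Metrics(
    IReadOnlyList<bool> labels, IReadOnlyList<bool> predicted)
{
    int tp = 0, fp = 0, tn = 0, fn = 0;
    for (int i = 0; i < labels.Count; i++)
    {
        if (predicted[i] && labels[i]) tp++;
        else if (predicted[i] && !labels[i]) fp++;
        else if (!predicted[i] && !labels[i]) tn++;
        else fn++;
    }
    double accuracy = (double)(tp + tn) / labels.Count;
    double precision = tp + fp == 0 ? 0 : (double)tp / (tp + fp);
    double recall = tp + fn == 0 ? 0 : (double)tp / (tp + fn);
    double f1 = precision + recall == 0 ? 0 : 2 * precision * recall / (precision + recall);
    return (accuracy, precision, recall, f1);
}

// AUC: probability that a random positive scores higher than a random negative.
double Auc(IReadOnlyList<bool> labels, IReadOnlyList<double> scores)
{
    var pos = scores.Where((s, i) => labels[i]).ToList();
    var neg = scores.Where((s, i) => !labels[i]).ToList();
    double wins = 0;
    foreach (double sp in pos)
        foreach (double sn in neg)
            wins += sp > sn ? 1.0 : sp == sn ? 0.5 : 0.0;
    return wins / (pos.Count * (double)neg.Count);
}

// Made-up test-set results; true = non-toxic (label 1).
bool[] actualLabels = { true, true, true, false, false, false };
double[] testScores = { 0.9, 0.7, 0.4, 0.6, 0.2, 0.1 };
bool[] predictedLabels = testScores.Select(s => s >= 0.5).ToArray();

var m = Metrics(actualLabels, predictedLabels);
Console.WriteLine($"Accuracy: {m.Accuracy:P2}  Precision: {m.Precision:P2}  Recall: {m.Recall:P2}  F1: {m.F1:P2}  AUC: {Auc(actualLabels, testScores):P2}");
```

With these numbers, accuracy, precision, recall and F1 all come out to 2/3, while AUC is 8/9 because only one positive/negative pair (0.4 vs. 0.6) is ranked the wrong way round. AUC depends only on how the scores are ordered, not on the 0.5 cut-off.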

Finally, the `AnalyzeComment` method reads comments from the console and reports whether the model classifies each one as toxic. Type `q` to quit.

```csharp
AnalyzeComment(mlContext, model);

void AnalyzeComment(MLContext mlContext, ITransformer model)
{
    // A PredictionEngine scores one CommentData at a time (not thread-safe).
    PredictionEngine<CommentData, ToxicityPrediction> predictionFunction = mlContext.Model.CreatePredictionEngine<CommentData, ToxicityPrediction>(model);

    while (true)
    {
        Console.ForegroundColor = ConsoleColor.White;
        Console.WriteLine("\nPlease enter a new comment (or 'q' to quit):");

        Console.ForegroundColor = ConsoleColor.Blue;
        string? comment = Console.ReadLine();

        // Stop before analysing the quit command (or at end of input).
        if (comment is null || comment == "q")
        {
            break;
        }

        CommentData sampleStatement = new CommentData
        {
            Comment = comment
        };

        var resultPrediction = predictionFunction.Predict(sampleStatement);

        Console.WriteLine();
        Console.ForegroundColor = ConsoleColor.White;
        Console.WriteLine("Analysing whether comment is toxic...");
        Console.WriteLine();
        Console.WriteLine($"Comment: {resultPrediction.Comment} | Prediction: {(resultPrediction.Prediction ? "Positive" : "Negative")} | Probability: {resultPrediction.Probability}");

        // Prediction == true means label 1 (non-toxic), and Probability is the
        // probability of label 1, so the toxic probability is 1 - Probability.
        if (resultPrediction.Prediction)
        {
            Console.ForegroundColor = ConsoleColor.Green;
            Console.WriteLine("Result: Comment is not TOXIC -- Non-toxic probability {0}", resultPrediction.Probability);
        }
        else
        {
            Console.ForegroundColor = ConsoleColor.Red;
            Console.WriteLine("Result: Comment is TOXIC -- Toxic probability {0}", 1 - resultPrediction.Probability);
        }
    }

    Console.ResetColor();
    Console.WriteLine();
}
```
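`Prediction` only says which label won. To flag a comment as toxic only when the model is fairly sure, you can apply your own threshold to the toxic probability, which is `1 - Probability` because `Probability` refers to label 1 (non-toxic). `Classify` and the threshold values below are hypothetical, not part of ML.NET.

```csharp
using System;

// Probability from the model is the probability of label 1 (non-toxic),
// so the probability of the comment being toxic is its complement.
(bool IsToxic, double ToxicProbability) Classify(float nonToxicProbability, double toxicThreshold)
{
    double toxicProbability = 1.0 - nonToxicProbability;
    return (toxicProbability >= toxicThreshold, toxicProbability);
}

// A stricter threshold flags fewer comments as toxic.
foreach (double threshold in new[] { 0.5, 0.8 })
{
    var (isToxic, pToxic) = Classify(0.3f, threshold);
    Console.WriteLine($"threshold {threshold:F1}: toxic = {isToxic} (toxic probability {pToxic:F2})");
}
```

A moderation tool might, for instance, auto-hide comments only above a high threshold and send borderline ones to a human reviewer.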

In conclusion, by training a logistic-regression classifier with ML.NET and evaluating it with `CalibratedBinaryClassificationMetrics`, we've built a simple C# console application that classifies comments as toxic or non-toxic. How reliable its predictions are depends on the training data, so check the printed accuracy, AUC, and F1 score before relying on it. This technology holds tremendous potential for fostering safer and more inclusive online communities, where users can engage in meaningful discourse without fear of harassment or abuse.

By continuing to explore and innovate in the field of machine learning, we can pave the way for a brighter, more welcoming digital future. Let's harness the power of technology to build a better world for all.
